Challenges

Pelmorex Corp., a Canadian weather information and media company wanted to modernize their data and analytics infrastructure to enable a single source of truth across the enterprise. They were looking for a scalable solution to ingest data from multiple sources onto a single platform, process it with little or no manual intervention, and make it available for daily consumption.

Key requirements:

- Move away from SSIS and SQL server (their primary data processing tools) which required a lot of manual intervention

- Establish a centralized repository of business logic to facilitate easy analysis

- Migrate existing and new data sources like Google Apps Manager, Google Analytics, NetSuite, WideOrbit, and Brightcove to a modern data platform for near-real-time analytics and reporting

- Easily add new data sources to their data lake and warehouse

- Optimize cost, time, resource utilization and enhance productivity using automation

- Ensure data security and compliance as per organizational policies

- Migrate Excel-based reports to Power BI and enable data visualization on the modern data platform

Ensured >99.99% availability of components with geo-replication on AWS

Solution

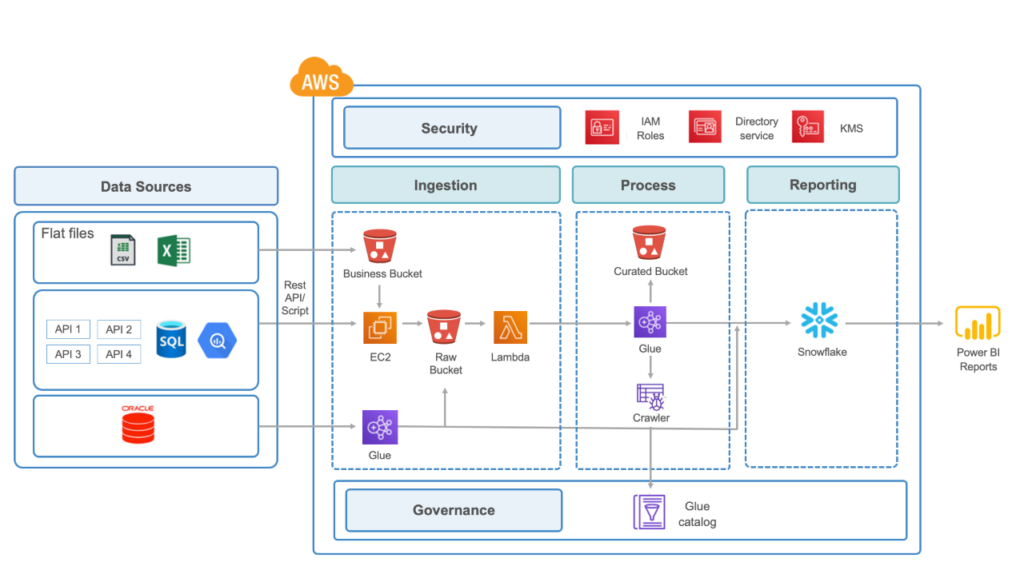

The Impetus team implemented and delivered an end-to-end modern data analytics platform on the cloud. To create data pipelines, Amazon EC2 scripts were used to ingest around 10GB data daily from multiple data sources across Python API, Amazon S3, Big Query, etc. and store them in S3. To trigger a new job every time a file is loaded in S3, Lambda functions were configured. Further, AWS Glue was used for data transformation, which allowed the creation of multiple parallel jobs and helped ingest and process data faster.

As the media house required a scalable, cost-effective data warehouse, Snowflake on AWS was used, which supports JSON-based functions, offers instant scaling, and automated maintenance. For BI, Power BI was leveraged, which consumed data from Snowflake for data visualization and analytics reporting. DevOps helped in streamlining code deployment in AWS.

To ensure data security and compliance, the solution:

- Ensured AWS access via single sign-on

- Used IAM for access management

- Encrypted data at rest and in transit

- Enabled Snowflake role-based access control to all the tables and schemas

- Created VPN, public, and private subnets

- Created NAT Instance for accessing the external network

A high-level functional component architecture of the solution is given below:

50% compute cost reduction by using AWS Glue for data transformation

Impact

Migrating the data warehouse from SQL to AWS seamlessly ensured >99.99% availability of components. It enabled parallel processing, which in turn reduced compute cost by 50%, and reduced execution time for processing raw data by 50%. Snowflake on AWS also improved data visibility for the media house, enabling them to perform ML-based predictive analytics and generate business insights.