Challenges

A US-based healthcare services provider wanted to revamp its analytics approach by consolidating data warehouse, ETL, and BI on the cloud to increase speed and flexibility. They wanted to move their CDH-based data lake to Snowflake on the cloud to ensure easy data availability and create a single source of truth across the organization.

Requirements

The healthcare services provider was looking for a future-proof, comprehensive, and flexible data warehouse to process data faster and deliver analytics right to the patient. They wanted a solution that would:

- Reduce total cost of ownership by enabling data share across regions

- Support data ingestion from a variety of relational databases like Oracle, MS SQL, PostgreSQL, MySQL, and SAP Hana

- Support batch ingestion with bulk and incremental load capabilities for multiple data formats

- Maintain high data quality across the data lifecycle

20+ TB data ingestion from 41+ source systems including 25000+ tables

Solution

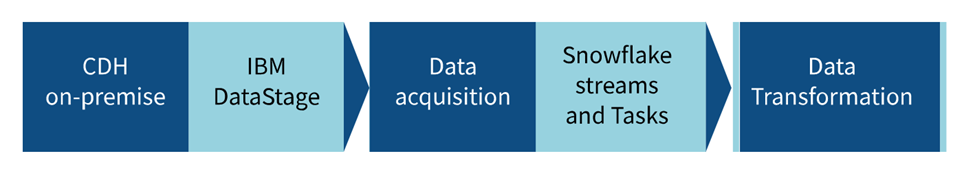

The Impetus team followed a 4-step approach to move the client’s data warehouse to Snowflake.

Data Ingestion

IBM DataStage and Snowflake SQL workflows were used to ingest data from CDH to Snowflake. To reduce the consumption of billing credits for computing and ensure faster data ingestion, JDBC inserts were replaced by Snowflake’s bulk load feature.

Data Curation

A data acquisition tier was developed on IBM DataStage to extract and load data from CDH on-premise. Snowflake Streams and Tasks cleansed and prepared the data for transformation. Splitting the cleansing and preparation process helped to handle failovers, where a single process was re-executed in case of failure.

The solution also used:

• Snowflake Merge to update, delete, and insert data to target Snowflake tables

• Azure Private Link to access Snowflake Virtual Network with defined authorization policies

• Snowflake end-to-end encryption for data in motion

Data Validation

The solution used a Snowflake SQL-based data validation component to check data quality. Data validation involved:

- Comparing record counts during ingestion start time with the target table

- Matching aggregate results on a numeric field with the source

- Matching metadata at the source with the target Snowflake table

Data Consumption

The validated data, residing in the eastern US, was securely shared with consumers in western Europe using Snowflake Data Share. This helped avoid geo-replication of data, enabled granular control to share only what is required, and reduced latency.

$500K annual savings and 60% reduction in overall ingestion time

Impact

The solution reduced the transformation effort of the existing team from one year to one month, lowered ingestion time by 60%, and led to $500K annual savings. It also ensured the availability of high-quality data across the organization for advanced analytics.