01

Business needs

A major American airline wanted to migrate its Bag Manager application from Teradata to AWS to:

- Reduce baggage data processing time for immediate consumption and analytics

- Add more checkpoints for bags in transit, such as scanned, added to storage, and loaded/unloaded

- Mobilize the airline’s ground staff to better manage scheduled flights

- Track changes in the state of the bags

Processed ~60 multi-level nested JSON messages per second with an average size of 80 KB

02

Solution

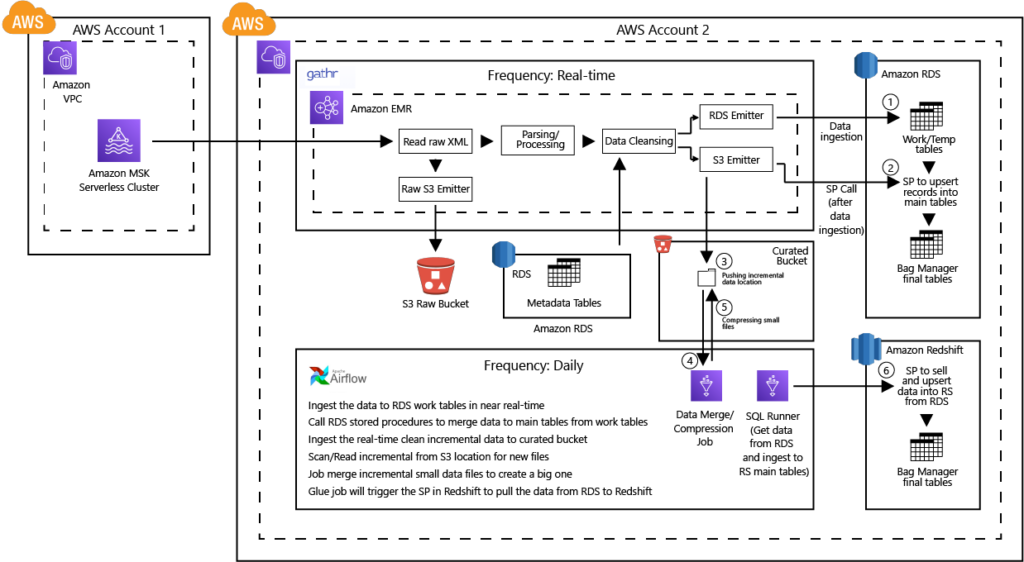

The Impetus team used Amazon Managed Streaming for Apache Kafka (Amazon MSK) to process ~60 multi-level nested JSON messages per second with an average size of 80 KB. Data was consumed from Amazon MSK with Gathr, an all-in-one data pipeline platform. In addition to processing 20 GB of data (~1,500,000 records) per day, Gathr also helped to:

- Parse the messages into a structured format for the consumption layer

- Implement change data capture (CDC) with a slowly changing dimension (SCD) type 1 strategy
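The source does not describe the message schema or how Gathr performs the parsing. As a rough illustration of turning one multi-level nested JSON message into flat rows for a consumption layer, the Python sketch below flattens nested objects into underscore-joined column names and explodes one repeated child array into one row per element. The message fields (`bagTag`, `flight`, `events`) and the helper names `flatten` and `to_rows` are hypothetical.

```python
import json
from typing import Any


def flatten(obj: dict[str, Any], prefix: str = "", sep: str = "_") -> dict[str, Any]:
    """Recursively flatten nested dicts into one level with joined key names."""
    flat: dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value  # scalars (and any lists not exploded) are kept as-is
    return flat


def to_rows(message: dict[str, Any], child_key: str) -> list[dict[str, Any]]:
    """Explode one nested message into flat rows, one per element of child_key.

    Assumes the child array holds JSON objects. Parent-level fields are repeated
    on every child row; a message with no children yields a single parent row.
    """
    parent = {k: v for k, v in message.items() if k != child_key}
    base = flatten(parent)
    children = message.get(child_key) or [{}]
    return [{**base, **flatten(child, child_key)} for child in children]


# Hypothetical bag message; field names are illustrative only.
raw = json.dumps({
    "bagTag": "0001234567",
    "flight": {"carrier": "XX", "number": "100", "date": "2024-01-01"},
    "events": [
        {"type": "SCANNED", "station": "DFW"},
        {"type": "LOADED", "station": "DFW"},
    ],
})

for row in to_rows(json.loads(raw), "events"):
    print(row)
```

Exploding the repeated array, rather than indexing it into numbered columns, keeps the output schema fixed no matter how many checkpoints a bag accumulates.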

The high-level solution architecture is shown in the diagram above.

Highlights

- Designed and implemented a separate pipeline to move historical data to Amazon Redshift for in-depth analytics

- Used a row-level hash function to speed up SCD type 1 processing

- Implemented a utility to merge and compress the small files that Gathr writes to Amazon S3

- Used Gathr health checks to detect data anomalies and report them to users
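The case study does not say which hash function was used or how the SCD type 1 merge was implemented. A common pattern, sketched below in Python under those assumptions, is to store a digest of each row's non-key columns and compare digests instead of every column: new keys are inserted, changed rows are overwritten in place (SCD type 1 keeps no history), and unchanged rows are skipped. SHA-256 over a sorted-key JSON encoding, the in-memory `target` dict standing in for the target table, the `_hash` column, and the function names are all illustrative choices.

```python
import hashlib
import json
from typing import Any


def row_hash(row: dict[str, Any], key_cols: tuple[str, ...]) -> str:
    """Digest all non-key columns, so a change in any of them changes the hash."""
    payload = {k: v for k, v in row.items() if k not in key_cols}
    # sort_keys makes the digest independent of column order
    canonical = json.dumps(payload, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def scd1_merge(target: dict[tuple, dict], incoming: list[dict],
               key_cols: tuple[str, ...]) -> dict[str, int]:
    """Apply SCD type 1 to target (business key -> stored row with a '_hash' column)."""
    stats = {"inserted": 0, "updated": 0, "unchanged": 0}
    for row in incoming:
        key = tuple(row[c] for c in key_cols)
        digest = row_hash(row, key_cols)
        current = target.get(key)
        if current is None:
            stats["inserted"] += 1
        elif current["_hash"] == digest:
            # One string comparison replaces a column-by-column diff
            stats["unchanged"] += 1
            continue
        else:
            stats["updated"] += 1
        # SCD type 1: overwrite the stored row, no history is kept
        target[key] = {**row, "_hash": digest}
    return stats


target: dict[tuple, dict] = {}
keys = ("bag_tag",)
print(scd1_merge(target, [{"bag_tag": "A1", "status": "SCANNED"}], keys))
# {'inserted': 1, 'updated': 0, 'unchanged': 0}
print(scd1_merge(target, [{"bag_tag": "A1", "status": "SCANNED"},
                          {"bag_tag": "B2", "status": "SCANNED"}], keys))
# {'inserted': 1, 'updated': 0, 'unchanged': 1}
print(scd1_merge(target, [{"bag_tag": "A1", "status": "LOADED"}], keys))
# {'inserted': 0, 'updated': 1, 'unchanged': 0}
```

On wide, flattened rows, comparing one stored digest per key is typically much cheaper than comparing every column, which is one plausible reason a row-level hash helps SCD type 1 performance; the digest has to be persisted alongside each row in the target table.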
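The small-file compaction utility is not described in the source. A minimal sketch of the idea, assuming the small files are newline-delimited JSON and that gzip is the compression codec, follows: `plan_batches` greedily groups objects (in key order) into batches of up to a hypothetical `target_bytes`, and `merge_and_compress` concatenates one batch's contents into a single gzip payload. Listing, downloading, uploading, and deleting the S3 objects (for example with an AWS SDK) are deliberately left out.

```python
import gzip
from typing import Iterable


def plan_batches(objects: list[tuple[str, int]], target_bytes: int) -> list[list[str]]:
    """Greedily group (key, size) pairs into batches of at most ~target_bytes each.

    Objects are taken in key order so each merged file covers a contiguous range.
    An object larger than target_bytes ends up in a batch of its own.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    current_size = 0
    for key, size in sorted(objects):
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(key)
        current_size += size
    if current:
        batches.append(current)
    return batches


def merge_and_compress(parts: Iterable[bytes]) -> bytes:
    """Concatenate newline-delimited JSON parts (skipping empty ones) and gzip the result."""
    body = b"".join(p if p.endswith(b"\n") else p + b"\n" for p in parts if p)
    return gzip.compress(body)


# Hypothetical object keys and sizes in bytes.
objs = [("part-0001.json", 40), ("part-0002.json", 70),
        ("part-0003.json", 30), ("part-0004.json", 200)]
print(plan_batches(objs, target_bytes=100))
# [['part-0001.json'], ['part-0002.json', 'part-0003.json'], ['part-0004.json']]
```

Fewer, larger, compressed objects generally reduce per-object request overhead for downstream readers and lower storage size; the right `target_bytes` depends on how the files are consumed.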

Reduced baggage data processing time from 20 minutes to real time

03

Impact

Migrating the baggage tracking application to Amazon MSK helped the airline to:

- Reduce baggage data processing time from 20 minutes to real time for immediate consumption and analytics

- Save cost and time by routing baggage to the correct destination after misconnects or itinerary changes

- Resolve issues quickly when misconnects, itinerary changes, or flight delays occur

- Enhance customer experience with real-time baggage tracking